Music, Mind and Machine: Official Research Group Projects and Descriptions

The following is a list of our current projects. Click on a project to view more detailed information.

Time-Critical Networks for Interaction Design

Professor Barry Vercoe

Computer games and learning environments increasingly involve humans relating to computational avatars and robots. Intelligence-modeling software requires real-time interaction between the parts, with channel capacities that must match the best of human-human performance. Many multi-modal activities challenge the real-time communication and comprehension speeds between participants. This project aims to enhance human-machine and machine-machine communication capacities between entities in order to encourage new models of interaction.

|

Classification of Killer-Whale Sounds with GMM and HMM |

|

|

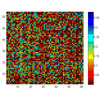

Professor Judy Brown The automatic classification of marine mammal sounds is very attractive as a means of assessing massive quantities of recorded data, freeing humans and offering rigorous and consistent output. Calculations on a set of vocalizations of Northern Resident killer whales using dynamic time warping have been reported recently. Since this method requires the time-consuming pre-processing measurement of frequency contours, we have explored the use of Gaussian Mixture Models (GMM) and Hidden Markov Models (HMM). These methods can be applied directly to time-frequency decompositions of the recorded signals. Calculations have been made on a set of 75 calls previously classified perceptually into 7 call types. Preliminary results give an agreement of roughly 85% with the perceptual classification for GMM and over 90% for HMM. |
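As a rough illustration of the likelihood-based decision rule behind GMM classification, the sketch below fits one diagonal-covariance Gaussian per call type (a GMM reduced to a single component; a full GMM would fit several components with EM) and assigns each call to the type with the highest total log-likelihood. The feature vectors, the label names `call_A` and `call_B`, and all function names are made up for illustration and are not the project's data or code. The `agreement` helper shows how a figure such as "85% agreement with the perceptual classification" could be computed.

```python
import math

def fit_gaussian(frames):
    """Fit a diagonal-covariance Gaussian to a list of feature vectors."""
    n = len(frames)
    dim = len(frames[0])
    mean = [sum(f[d] for f in frames) / n for d in range(dim)]
    # A variance floor keeps the log-likelihood finite for constant features.
    var = [max(sum((f[d] - mean[d]) ** 2 for f in frames) / n, 1e-6)
           for d in range(dim)]
    return mean, var

def log_likelihood(frames, model):
    """Sum the per-frame Gaussian log-densities over one call."""
    mean, var = model
    total = 0.0
    for frame in frames:
        for x, m, v in zip(frame, mean, var):
            total += -0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)
    return total

def classify(frames, models):
    """Return the label whose model gives the highest log-likelihood."""
    return max(models, key=lambda label: log_likelihood(frames, models[label]))

def agreement(predicted, perceptual):
    """Fraction of calls where the automatic and perceptual labels match."""
    matches = sum(p == q for p, q in zip(predicted, perceptual))
    return matches / len(perceptual)

# Hypothetical two-dimensional features per time frame (not real whale data).
training = {
    "call_A": [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2]],
    "call_B": [[5.0, 6.0], [5.2, 5.8], [4.8, 6.2]],
}
models = {label: fit_gaussian(frames) for label, frames in training.items()}

test_calls = [[[1.1, 2.1], [0.9, 1.9]], [[5.1, 6.1], [4.9, 5.9]]]
predicted = [classify(call, models) for call in test_calls]
print(predicted, agreement(predicted, ["call_A", "call_B"]))
```

An HMM-based classifier follows the same pattern of one model per call type, but scores each call with a model that also accounts for the order of the frames.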

Sound Design with Everyday Words

Mihir Sarkar, Cyril Lan, Joe Diaz, Yang Yang, Professor Barry Vercoe

Musicians and music listeners often describe the quality of musical sounds with words like "bright" or "warm", "metallic" or "fat". Our project investigates how these verbal descriptors, or perceptual tags, relate to the perception of timbre. We are investigating whether people use a common terminology to describe timbre or whether their choice of words is linked to their musical or cultural background, and how specific words correlate with particular audio features. We are currently analyzing results from an online survey in which more than 1200 participants described the sounds they heard. Our objective is to design an audio processing engine that can dynamically retrieve sounds from a database based on a user's profile and verbal description, and that can modify sounds by using words instead of numerical parameters.
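One simple way to check whether a word correlates with an audio feature is a Pearson correlation between the feature value of each sound and the fraction of listeners who chose that word. The sketch below assumes spectral centroid as the feature and "bright" as the word; both choices, and all the numbers, are made-up illustrations rather than results from the survey.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up illustration values, NOT survey data: one entry per sound.
spectral_centroid_hz = [800.0, 1500.0, 2200.0, 3000.0, 4100.0]
bright_votes = [0.10, 0.25, 0.50, 0.70, 0.90]  # share of listeners saying "bright"

r = pearson(spectral_centroid_hz, bright_votes)
print(f"correlation between centroid and 'bright': {r:.2f}")
```

A value of `r` close to 1 would suggest that the word tracks the feature closely; repeating the calculation per listener group would show whether the relationship depends on musical or cultural background.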

TABLANET: Real-Time Music Performance for Networked Indian Percussion

Mihir Sarkar, Professor Barry Vercoe

Multitrack recording enabled musicians to play together without being present in the same room at the same time. Now network music performance technologies allow musicians who are located in different spaces to play live with each other. To minimize the effects of latency, some systems rely on audio streaming over high-speed networks like Internet2, but this imposes limits on the allowable distance between musicians or on the tempo of the music; others employ audio looping, but this constrains the musical style. For playing highly rhythmic music that requires tight synchronization over regular Internet or wireless networks across continents, we propose TablaNet, a real-time online musical collaboration system for the tabla, a pair of Indian hand drums. The system employs a novel approach that combines machine listening and music prediction to overcome latency.
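The description does not say how TablaNet predicts music, so the following is only a sketch of the general idea: if the sender transmits recognized stroke names instead of audio, the receiver can guess the next stroke from what it has already heard and play it before the real event arrives. Here a first-order (bigram) model stands in for the predictor. The stroke sequence, the function names, and the fallback stroke are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(strokes):
    """Count how often each stroke is followed by each other stroke."""
    counts = defaultdict(Counter)
    for current, following in zip(strokes, strokes[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, last_stroke, fallback):
    """Return the most frequent follower of last_stroke, or fallback if unseen."""
    followers = counts.get(last_stroke)
    if not followers:
        return fallback
    return followers.most_common(1)[0][0]

# Hypothetical stroke names as they might come out of a machine-listening stage.
heard = ["dha", "ge", "na", "dha", "ge", "na", "dha", "tin", "na"]
model = train_bigram(heard)

# The receiver plays the predicted stroke while the true one is still in transit.
print(predict_next(model, "dha", fallback="na"))
print(predict_next(model, "ke", fallback="na"))
```

A practical system would also need to handle timing and to recover gracefully when a prediction turns out to be wrong.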

Cross-Cultural Music Transformation

Cheng Zhi Anna Huang, Professor Barry Vercoe

It takes us years to learn our own musical tradition, and it is rare to find people who have become musically multilingual. However, by learning to compose in different cultural styles we can expand our compositional palette and communicate more effectively across cultural boundaries. We are designing a computer-assisted compositional tool that helps composers begin composing melodies in other cultural styles by dynamically analyzing the musical context and presenting melodic materials from various cultures as musical analogies. These melodic patterns address questions such as: In a target cultural style, how does one develop a musical idea? What are the idiomatic melodic progressions? How does one establish a structural pitch? What are the possible continuations to an unfinished melody, and how does one cadence? This tool makes it easier for composers to transform and render their musical ideas in other musical languages.

|

Musicpainter |

|

|

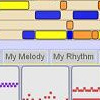

Wu-Hsi Li, Professor Barry Vercoe Musicpainter is a networked graphical composing environment that encourages sharing and collaboration within the composing process. It provides a social environment where users can gather and learn from each other. The approach is based on sharing and managing music creation in small and large scales. At the small scale, users are encouraged to begin composing by conceiving small musical ideas, such as melodic or rhythmic fragments, all of which are collected and made available to all users as a shared composing resource. The collection provides a dynamic source of composing material that is inspiring and reusable. At the large scale, users can access full compositions that are shared as open projects. Users can listen to and change any piece if they want. The system generates an attribution list on the edited piece and thus allows users to trace how a piece evolves in the environment. |
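The attribution list could be modeled by having every edited piece keep a link to the piece it was derived from. The sketch below is a hypothetical data structure, not Musicpainter's actual implementation: the `Piece` class, its fields, and the user names are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Piece:
    title: str
    author: str
    parent: Optional["Piece"] = None  # the piece this one was edited from

    def edit(self, editor: str, new_title: Optional[str] = None) -> "Piece":
        """Create a derived piece that remembers where it came from."""
        return Piece(new_title or self.title, editor, parent=self)

    def attribution(self) -> List[str]:
        """List contributors from the original author to the latest editor."""
        chain = []
        node = self
        while node is not None:
            chain.append(node.author)
            node = node.parent
        return list(reversed(chain))

original = Piece("Morning Tune", "alice")
second = original.edit("bob")
third = second.edit("carol", "Morning Tune (remix)")
print(third.attribution())
```

Because each edit creates a new object rather than overwriting the old one, earlier versions stay available and the evolution of a piece can be traced step by step.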

Musicscape

Wu-Hsi Li, Professor Barry Vercoe

Nowadays, MP3 players can easily store thousands of songs. However, having more choices does not necessarily lead us to enjoy more music, because a large portion of music is lost when users are asked to name the music before they can hear it. Sometimes we do not know what we really want, but when the right music comes along the answer is so obvious we can hardly miss it. This is the moment we want to capture. Musicscape is an experimental music navigation interface that creates serendipitous musical encounters. Users walk around the music space in order to meet the right tunes. Moreover, the music space can be further developed into a sociable medium, where users encounter songs and their audience at the same time.